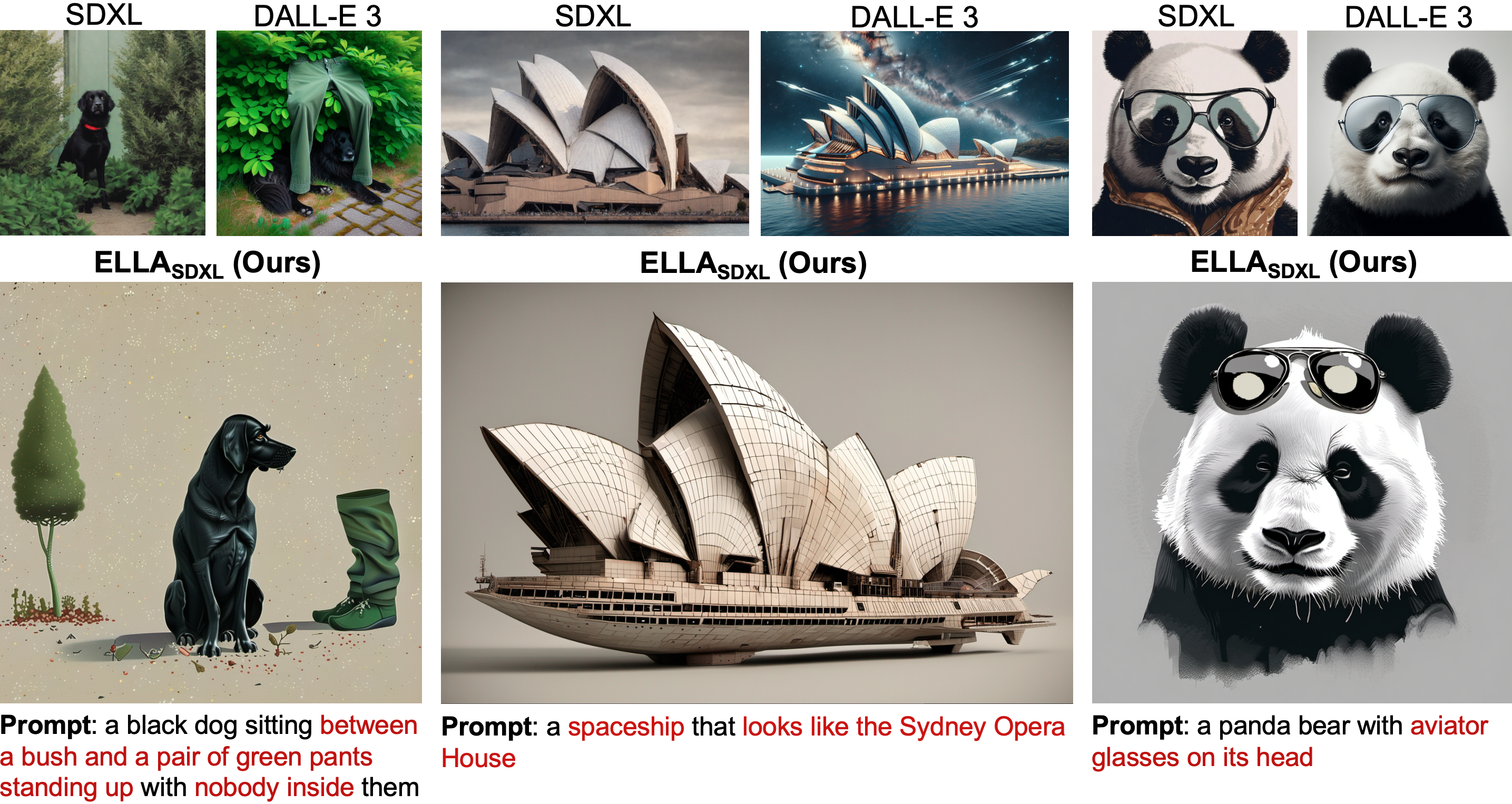

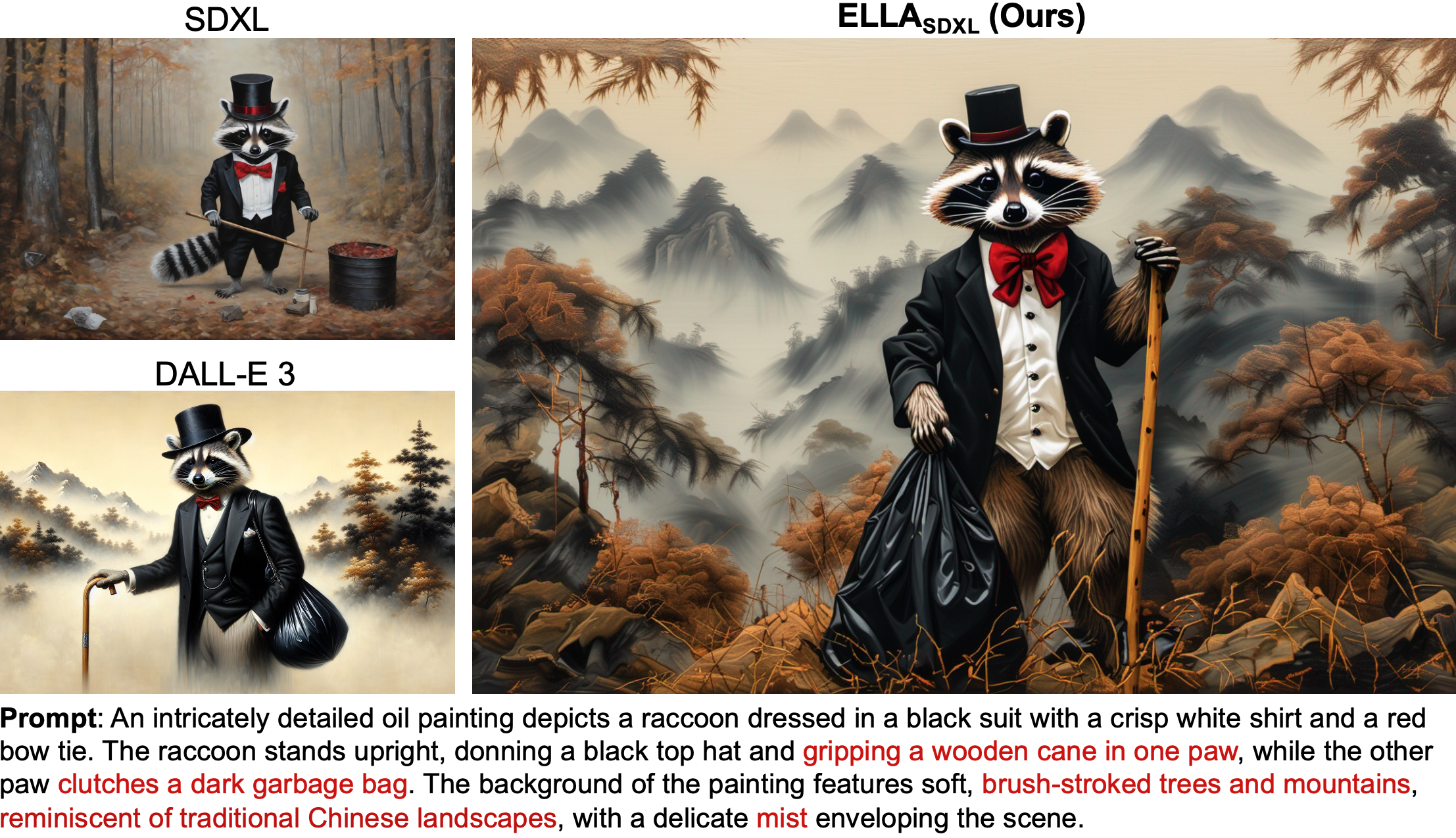

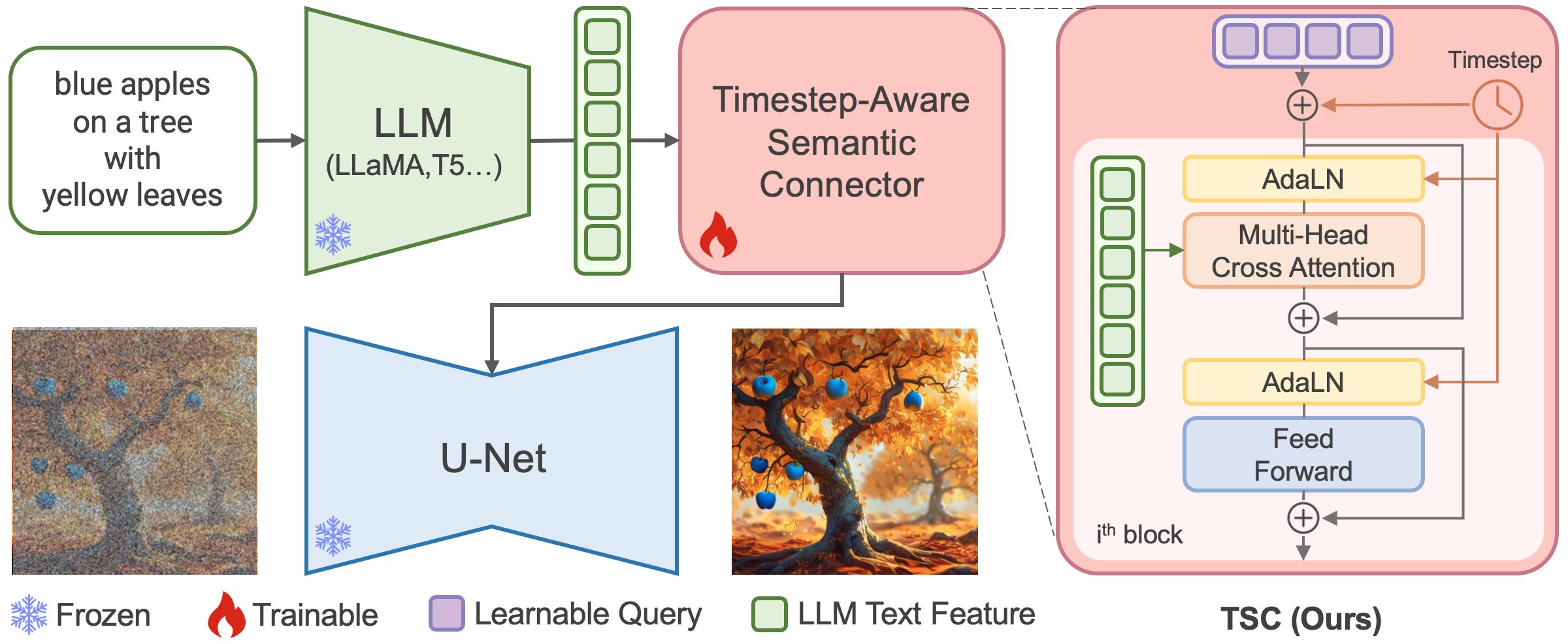

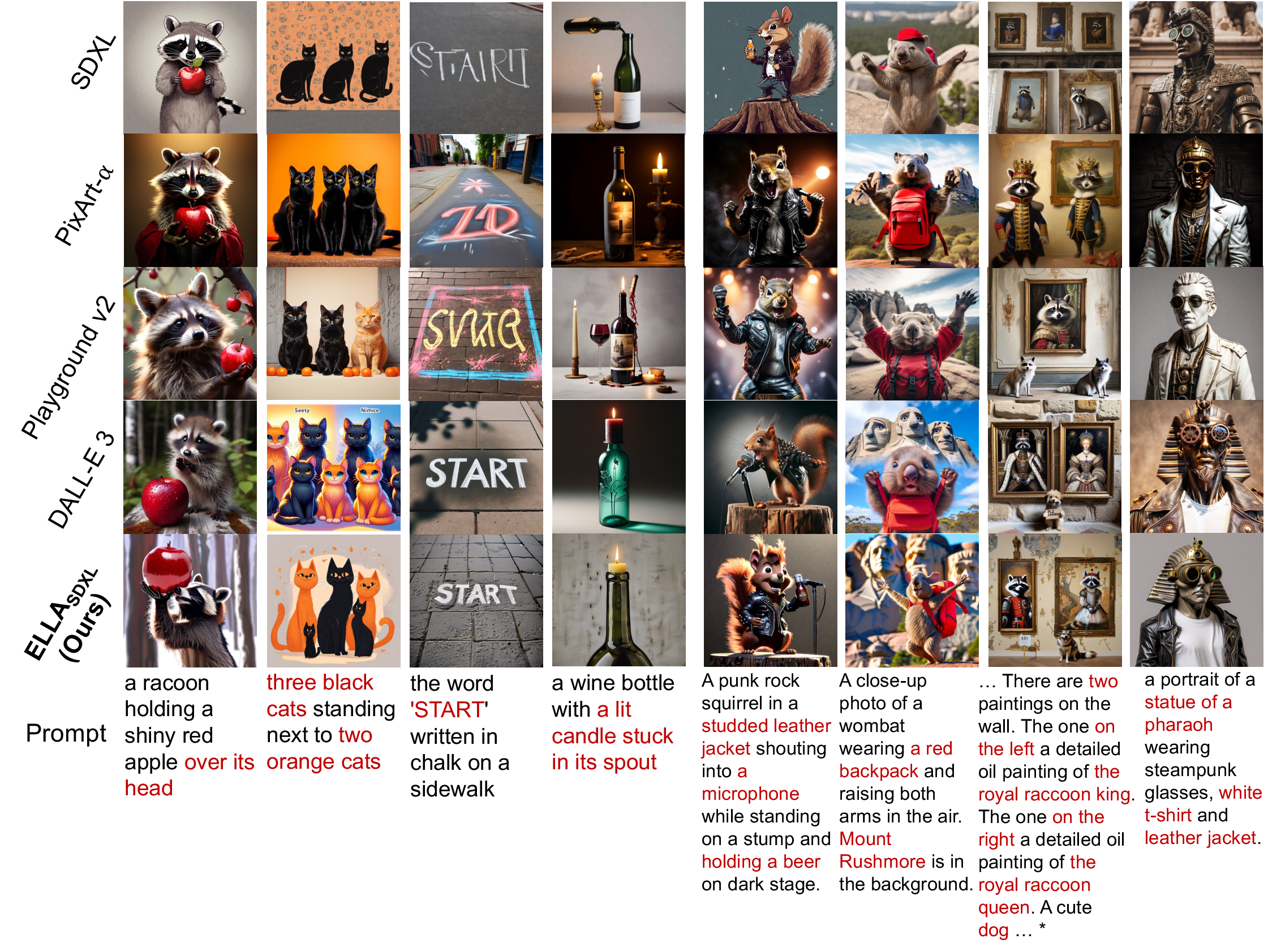

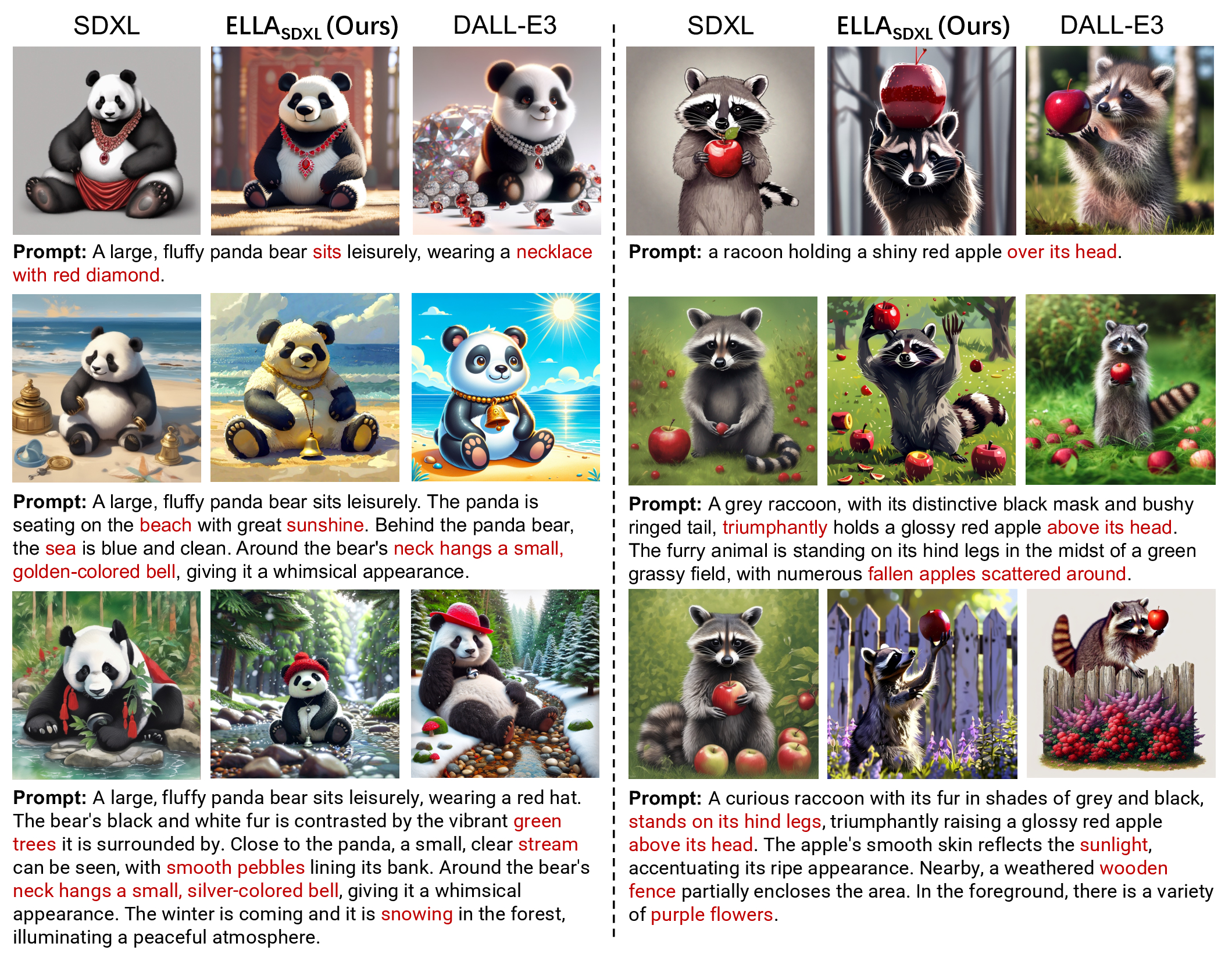

Diffusion models have demonstrated remarkable performance in text-to-image generation. However, most of these models still employ CLIP as their text encoder, which limits their ability to comprehend dense prompts involving multiple objects, detailed attributes, complex relationships, and long-text alignment. In this paper, we introduce an Efficient Large Language Model Adapter, termed ELLA, which equips text-to-image diffusion models with powerful Large Language Models (LLMs) to enhance text alignment without training either the U-Net or the LLM. To seamlessly bridge the two pre-trained models, we investigate a range of semantic alignment connector designs and propose a novel module, the Timestep-Aware Semantic Connector (TSC), which dynamically extracts timestep-dependent conditions from the LLM. Our approach adapts semantic features at different stages of the denoising process, helping diffusion models interpret lengthy and intricate prompts over sampling timesteps. Additionally, ELLA can be readily incorporated with community models and tools to improve their prompt-following capabilities. To assess text-to-image models on dense prompt following, we introduce the Dense Prompt Graph Benchmark (DPG-Bench), a challenging benchmark consisting of 1K dense prompts. Extensive experiments demonstrate the superiority of ELLA in dense prompt following compared to state-of-the-art methods, particularly in multiple object compositions involving diverse attributes and relationships.

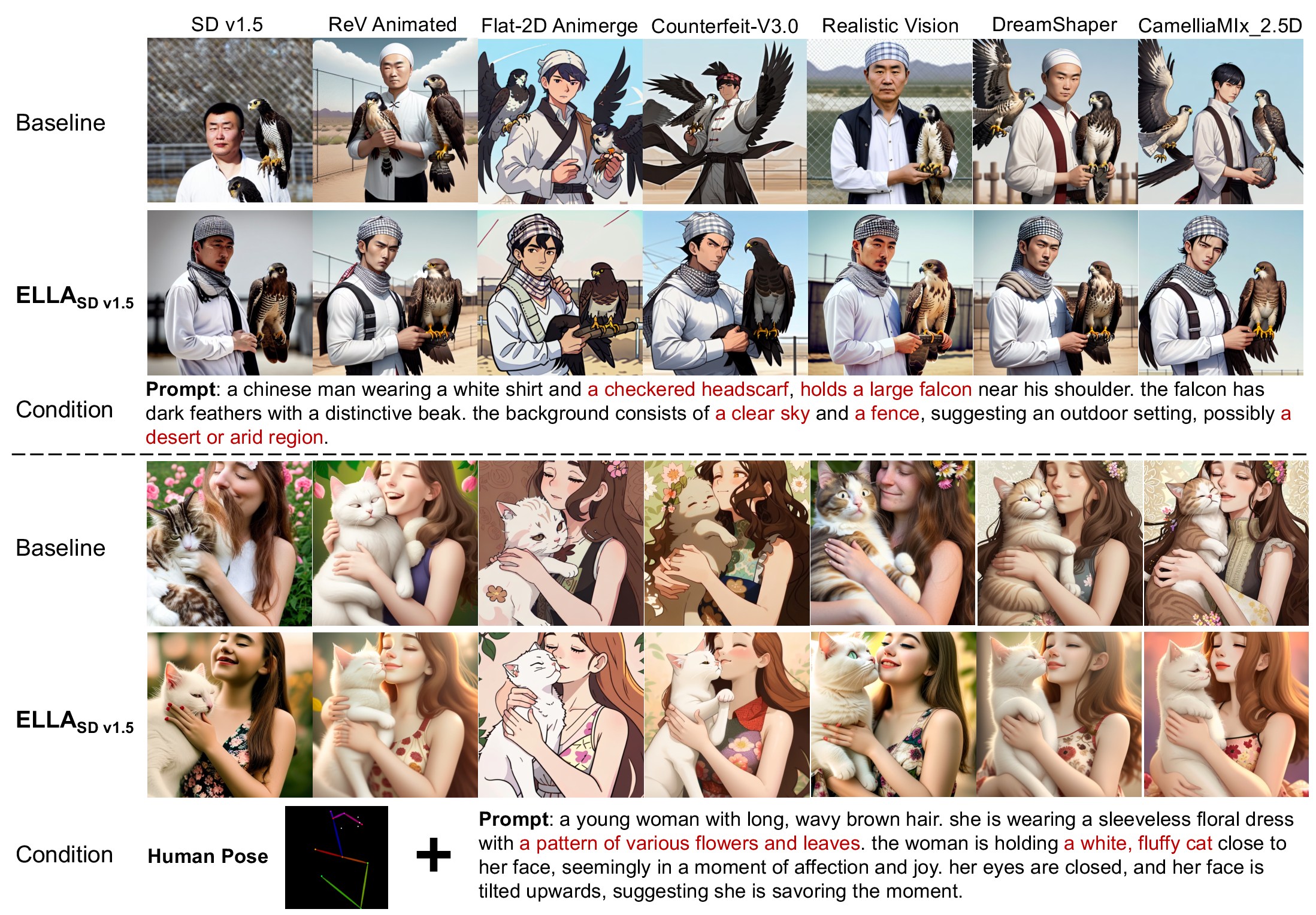

We propose ELLA, a novel lightweight approach that equips existing CLIP-based diffusion models with powerful LLMs. Without training either the U-Net or the LLM, ELLA improves the prompt-following abilities of text-to-image models and enables them to comprehend long, dense text.

We design a Timestep-Aware Semantic Connector (TSC) to extract timestep-dependent conditions from the pre-trained LLM at various denoising stages. The TSC dynamically adapts semantic features over sampling timesteps, which effectively conditions the frozen U-Net at distinct semantic levels.

Once trained, ELLA can be seamlessly integrated with community models and downstream tools such as LoRA and ControlNet, improving their text-image alignment.
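To make the idea of timestep-dependent conditioning more concrete, the following is a minimal toy sketch in plain Python. It is not the ELLA implementation: the text above does not specify the connector's architecture, so everything here is an assumption chosen for illustration. In particular, the class name `ToyTimestepAwareConnector`, the use of a fixed set of query vectors that cross-attend to the LLM token features, the sinusoidal timestep embedding, and the scale-and-shift modulation of the queries are all hypothetical choices, and the random weights stand in for parameters that would be learned while the U-Net and LLM stay frozen.

```python
import math
import random


def timestep_embedding(t, dim):
    """Sinusoidal embedding of a scalar timestep (dim is assumed even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class ToyTimestepAwareConnector:
    """Hypothetical connector: timestep-modulated queries attend to text features."""

    def __init__(self, dim, num_queries, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        # Stand-ins for learnable parameters (random here, trained in practice).
        self.queries = [[rng.gauss(0, 1) for _ in range(dim)]
                        for _ in range(num_queries)]
        self.w_scale = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
        self.w_shift = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def __call__(self, text_features, t):
        # Map the timestep to a per-channel scale and shift (assumed modulation).
        emb = timestep_embedding(t, self.dim)
        scale = [dot(row, emb) for row in self.w_scale]
        shift = [dot(row, emb) for row in self.w_shift]

        conditions = []
        for q in self.queries:
            # The same query looks for different content at different timesteps.
            q_t = [x * (1.0 + s) + b for x, s, b in zip(q, scale, shift)]
            scores = [dot(q_t, f) / math.sqrt(self.dim) for f in text_features]
            attn = softmax(scores)
            # Each condition token is an attention-weighted mix of text features.
            conditions.append([
                sum(a * f[j] for a, f in zip(attn, text_features))
                for j in range(self.dim)
            ])
        return conditions


# Stand-in for frozen LLM token features: 10 tokens, 8 channels each.
rng = random.Random(1)
text_features = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(10)]

connector = ToyTimestepAwareConnector(dim=8, num_queries=4)
cond_early = connector(text_features, t=900)  # early, noisy denoising step
cond_late = connector(text_features, t=100)   # late, low-noise denoising step
```

In this sketch the connector always returns the same number of condition tokens regardless of prompt length, and the tokens change with `t`, so a frozen denoiser would receive different conditions at different denoising stages from the same prompt.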